Final Report

Video

Project Summary

The goal of this project is to teach an AI to fight zombies in the Minecraft environment. In our project the agent gets spawened in a room with some variable amount of zombies. Then the agent then needs to survive and kill all of the zombies before time runs out.

While our original goal was to learn to fight different combinations of hostile mobs at a time, after discussing with the professor and due to time constraints we have scaled down our project goals to just fighting zombies. Having multiple zombies coming from diffrent directions means that the agent has to decide which one to deal with first as well as if it should move towards or away from the zombies. As the number of zombies it’s facing grows it starts being a problem that is difficult even for a human agent to attept. We are currently using Reinforcement leaning algorithms with help of a neural networks in order to learn which actions the agent should take at the current time in order to get the most reward, which is our case was to kill more zombies and not die.

Approaches

-

Malmo Environment

We needed an environment for our train our and agent and test its ability to fight zombies. We did not want our agent to just learn to run away so we made a closed cage for our arena.

Here’s some more details about our environment:

-

We used the

<FlatWorldGenerator>and the<DrawingDecorator>to generate our map. -

We set the initial time to night and froze the time, because Zombies catch fire during day and die.

-

Used glowstone for the walls so we have enough light to see what’s going on.

-

Made our Mission XML customizable in terms of Arena size and number of spawned Zombies.

-

Made a top view UI (HUD) of the map with locations our our agents and zombies.

-

Enchanted our agents sword to do more damage.

Our Malmo environment is similar for each of our approaches.

-

-

Learning Related Definitions

We used Reinforcement learning as our learning approach for our agent. As usuall for reinforcement learning we assumed Markov property for our environment, i.e. the best action to take only dependent on our current state and not the history of what happened before.

States

We had two different approaches from our status report and our final repot. We used different ways to model our states because we used different models to approximate our Q-value estimations. For more detail mathematical representation of states look at part 3.

Actions

For our actions, we used continuous movement. We allow our agent to move front and backward and turn around, but did not let the zombie look up and down. Overall our agent had the following actions:

- Attack (Always on by default)

- Start turning right

- Start turning left

- Stop turning

- Start moving forward

- Start moving backward

- Stop moving

At each step, our agent had a choice of these actions.

Rewards

Here’s how we awarded the rewards to our agent.

- Small negative reward base on time ( per Tick)

- Big positive reward for killing each zombie ( per zombie)

- Negative reward for each damage taken (average per hit)

Algorithm and Equations

We used the standard -step SARSA algorithm to calcuate our Q-Estimates, and we used -greedy policy to let our agent both take good decision and sometimes try different things.

represents the expected total reward if we are in state and take action . This helps us to decide which actions to take, in each step we just take the action with maximum expected reward, or choose randomly.

Here’s the equation for calculating the target rewards in the SARSA algorithm.

In the equation below, is the terminal time, is the time whose estimate we are updating, and is number of states backward we look into for the update. Also is the discount factor.

- We are in a terminating state.

- We are not in a terminating state: then we use our Q(s,a) to estimate how much more reward are going to get and we add it to the value from above forumula:

Then we use the target value to update our Q estimates. In general we follow the following equation:

However, since number of possible states in our case is too high we cannot use simple approaches like Dynamic Programming to store all the values. So we are in need of ways to approximate our Q estimates. So we are basically approximating something that was also an approximation. We used two different supervised learning models to do the approximations, as you see in the next part of report.

-

Q-Estimate Approximation models

-

Linear Model using Stochastic Gradient Descent

During our status report we made a linear model for out approximation that was only good for a single zombie. The downsides of this approach was that it was unable to deal with a variable number of zombies. Also since we used angles relative to zombies a linear model had hard time distingushing 180 degree and -180 degrees. Technically both this angles point to the same direction and if the zombie was on there it would confuse our linear model since there is discontinuty there.

The way we featurized our state was as a function of State and Action. The size of the vector is twice the number of available actions (3 actions so far). Each actions has two corresponding elements in the vector, and those numbers are zeros if we are not taking that action, and for the action we are taking the first number is the distance to the zombie and the second number is relative angle of us to the zombie. For example if the distance to zombie is 10 and the relative angle is 45 degrees and we take the first action the feature vector becomes:

-

“Deep” Neural Network

For the final submission we used a neural network to make decisions for the actions to take. The reward were similar. The only difference was the feature vector we used as input of our model. In this case we had a maxmium number of allowed zombies and our feature vector had size twice that value. Then our feature vector had distance and relaive angle to each zombie, putting the closer zombies first. For example if we had 3 zombies maximum and two zombies were at distance 1, 2 and angle 45 and 145 respectively we got the following feature vector. We put high distance for zombies that either died or did not exists.

The advantage of this approach was that it enabled the agent to deal with multiple diffrent zombies at once as well as learn to deal with issues like the flip betwean postive and negetive angle in some places in the rotation. This approach enabled the agent to learn more complicated things leading it to fight multiple zombies farily well.

The input for our neutal network was the feature vector for the state and it gave the Q estimate for all possible actions. Then we could use the estimates and take the action with maximum Q-estimate or act randomly (since we were using -greedy policy).

Since training neural networks are expensive computations, instead of training the neural network right away. We stored the states and target values in a queue and then batch updated our neural network between episodes. We used all the observations from previous episode for our training (short term memory of agent). Also as suggested in recent literture we also kept observations from older episodes (basically long term memory of agent)and took a small random sample of those for our training.

The only downside with neural networks is that they are much harder to setup and debug and use properly. Also they are really data hungry and need much more episodes to be trained.

-

-

Training

We trained our agent on both our models, also on a on random moving agent for comparison. Each time we let the agent run for hundreds of episodes. Each round of training took several hours.

Evaluation

Quantitative

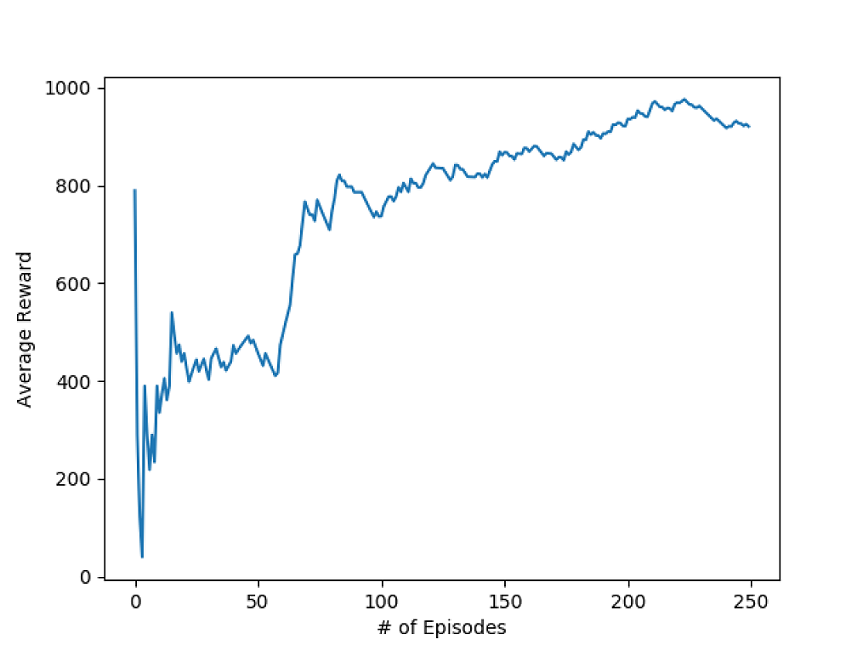

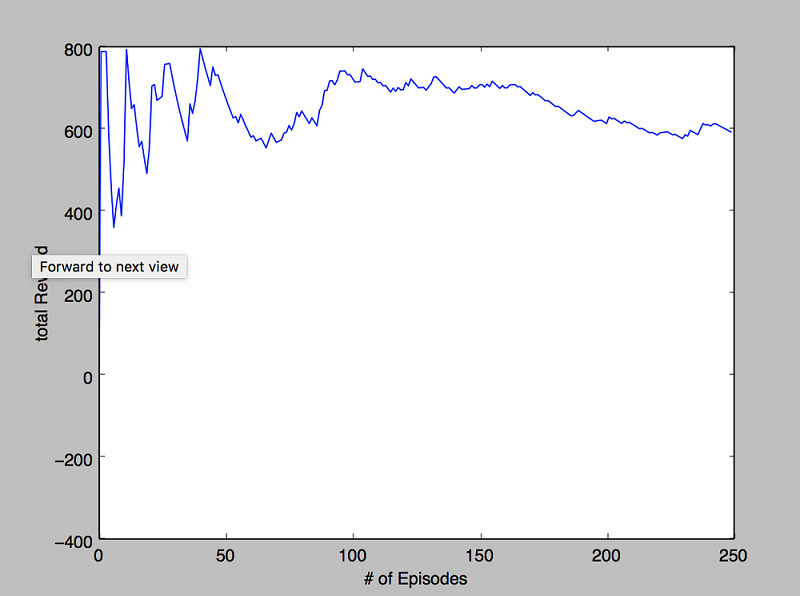

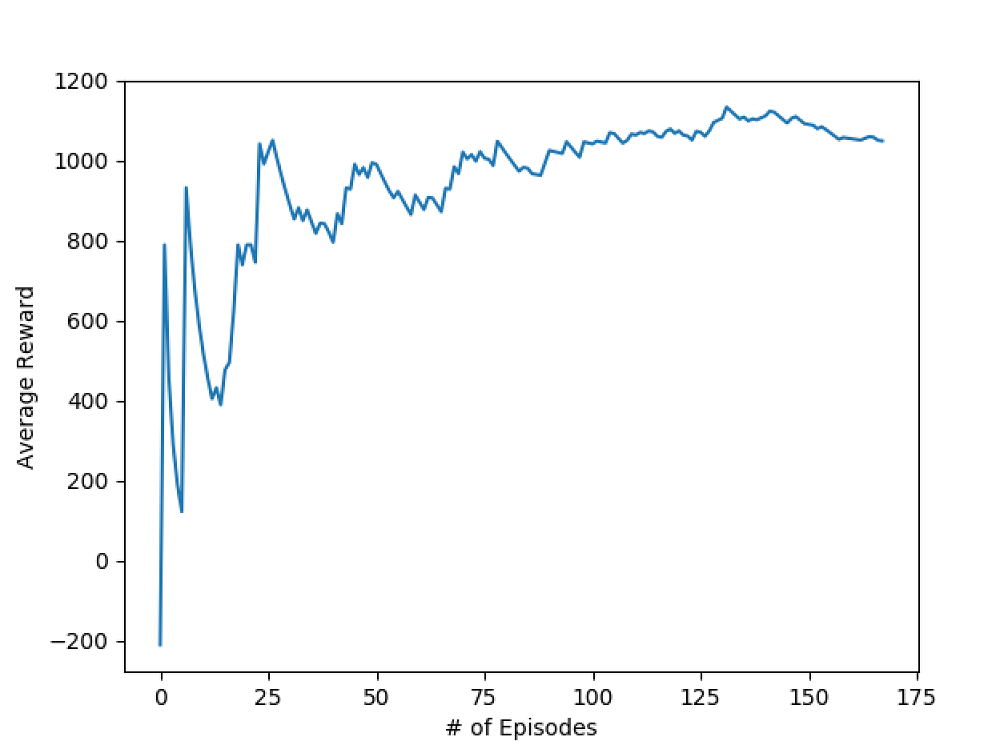

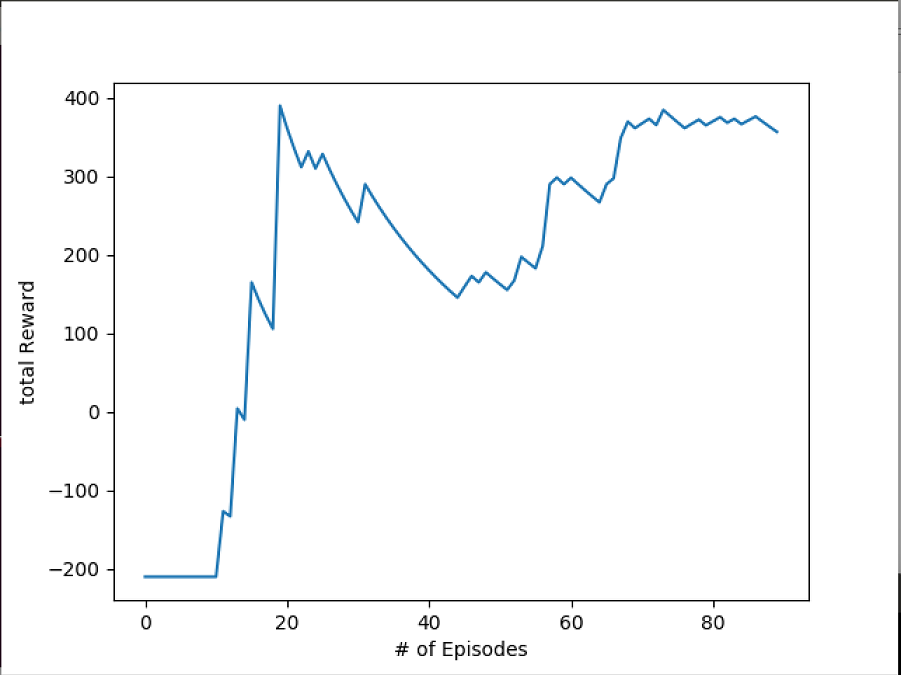

As you can see from the graphs below there the agent improved over time at killing the zomibes. The graphs below show the average reward over time. We tried our agent in different settings and with different number or zombies. Also we compared performance of our agent with a completely random agent. As we see our agent is doing better on average as more episodes its trained on, and is significantly doing better than the random counterpart.

This part focuses on achievements of our agent that used the neural network, to see performance of our linear model (which was limited to one zombie), you can look at status report.

4 Zombies

In this part we trained our agent on 4 zombies and as you see the agent is doing better over time as we train on more episodes. We kept track of the running average of the rewards agent got.This graph shows episode vs average total reward with 4 zombies. As you can see in this graph the average total reward goes up significantly over time showing that the agent is infact learning to become better. It seems the agent still can learn more if trained on more episodes but training takes lots of time.

In comparison this is the same graph of episodes vs average total reward with 4 zombies where epsilon is set to 1 so all choices are random. You can see that there is no increase in average reward overtime and that the random AI is clearly performing much worst.

8 zombies

As you can see in the graph below is for 8 zombies, in this case the learning is not significant, but task of killing 8 zombies was very hard even for a human agents. Again, it performs much better than random agent.

This is graph with 8 zombies and random choices.

Qualitative

Watching the AI fight against 4 zombies you can clearly see that in the begining he is moving mostly randomly and the Zombies normaly kill him pretty quickly. However After a few hundered episodes run the agent gets much better and occasionally beats all four zombies fairly. Also in case of 8 zombies its even hard for a human player to do much since before you know it you are hit by 8 zombies and you are dead.

References

Papers and articles refrenced

First Steps With Neural Nets in Keras

Demystifying Deep Reinforcement Learning

Reinforcement Learning Code examples

Features we requested for malmo

Get reward for dealing damadge to mobs

Libraries