Status

Summary:

While our original goal was to learn to fight different combinations of hostile mobs at a time, after discussing with the professor and due to time constraints we have scaled down our project goals to just fighting zombies. Currently, we are learning to turn towards a single zombie to attack it. Our future goals involve being able to move around the arena while simultaneously combating multiple zombies.

Currently, we use n-step Sarsa algorithm to update our Q estimates. Also our agent uses epsilon greedy algorithm to choose which actions to take based on our estimates. Since number of different states is really high, we use a feature vector to summarize our states and then we use a Stocahstic Gradeint Descent when updating the estimates.

Approach:

To have a working prototype for the Progress report we made some simplifications to our original goal, more specifically we only place one zombie in a room of a fixed size and limit the possible actions our agent can make to “Turn Right”, “Turn Left”, and “Stop Turning”. That way our agent only needs to learn which way to turn in order to hit the zombie. Since we were originally were unable to give the agent a reward for damaging a zombie we gave the agent a diamond sword with strong enchantments on it so that it can kill a zombie in one hit.

We are using reinforcement learning, and hence we need to define our MDP. For the progress report our states are consisted of the distance to the zombie and the angle the zombie resides relative to our yaw. And the actions are “Turn Right”, “Turn Left”, “Stop Turning”. We need the “stop turning” action since we are using continuous movement. Turn right means start turning right until told otherwise, and similarly for other two actions. Rewards are as follows:

- Small negative reward base on time ( per Tick)

- Big positive reward for killing zombie ( per zombie)

- Negative reward for each damage taken (average per hit)

Here’s the equations used for n-step Sarsa algorithm. being the terminal time. being the time whose estimate we are updating. is number of states backward we look into for the update.

And if we are not at a terminating state we use our estimates of how much more reward we can get. More specifically we make the following change:

Also we use the standard n-step Sarsa equation to update our Q-estimate.

However since number of our states is too high, we use a linear model of a feature vector of our states and use Stochastic Gradeint Descent to update the estimate(we use scikit-learn’s library). So on the right hand side of the equation is computed using predict function, and the left hand side is updated using the partial_fit function.

The way we featurized our state was as a function of State and Action. The size of the vector is twice the number of available actions (3 actions so far). Each actions has two corresponding elements in the vector, and those numbers are zeros if we are not taking that action, and for the action we are taking the first number is the distance to the zombie and the second number is relative angle of us to the zombie. For example if the distance to zombie is 10 and the relative angle is 45 degrees and we take the first action the feature vector becomes:

Evaluation:

Qualitative

For the qualitative approach we could look at our bot and see which way it decided to turn at each step, then we could see if this behaviour improved over time or not. More specifiacally our bot should turn in the direction of the zombie to be able to hit it. By looking over our bot over several number of epiosdes and several different runs, by only looking at the behaviour of the bot we could see that its doing better and killing the zombie more often.

Quantitative

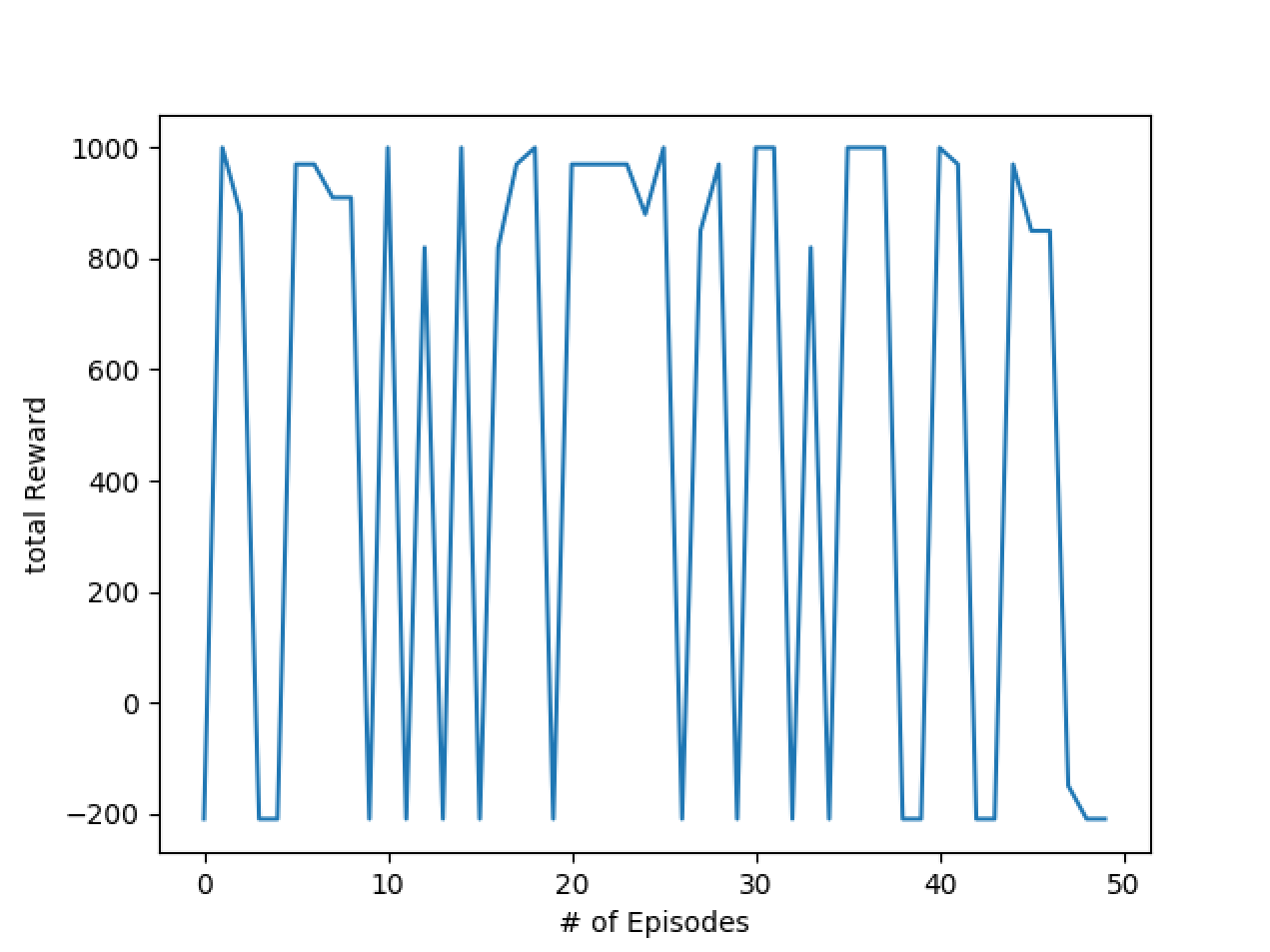

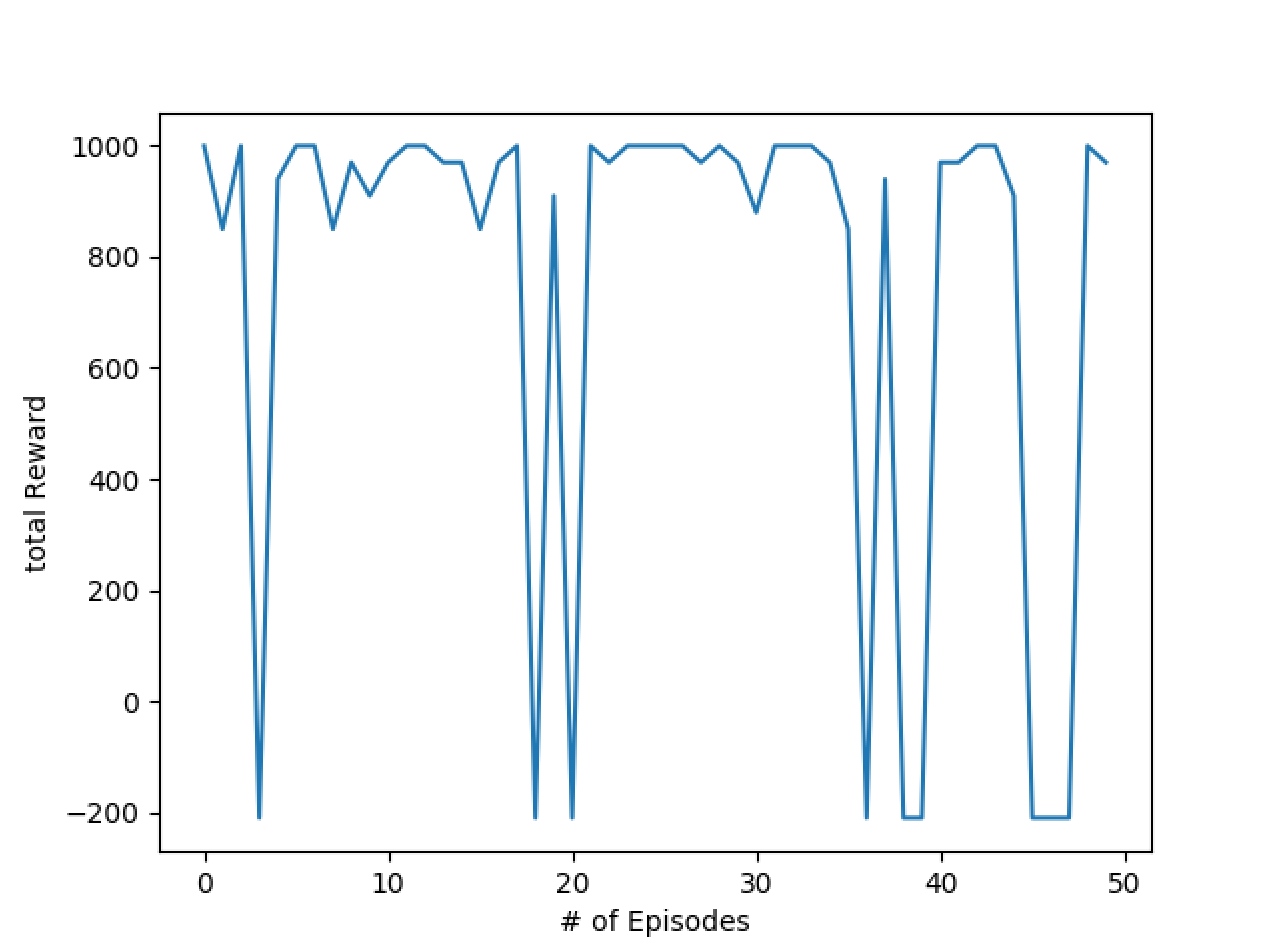

For the quantitative approach we recorded rewards we got for each episode and plotted it. To able to actually see if our bot is learning or not, we also ran our bot with actions completely randomized (i.e. ). After comparing the two graphs is easy to see that our Zombie-Hunter bot does much better than the random one, hence there is some learning happening here. But there still should be room for improvements and might need to handle more edge cases that might arise.

Performance of random bot:

Performance of Zombie-Hunter:

Remaining Goals and Challenges:

Currently our mob is capable of learning to turn so that it hits the zombie running towards it. We are hoping that in the next 2-4 weeks, we are able to do the following:

-

Our agent to be able to move around the arena, so our actions won’t be only limted to turning or not turning

-

Our agent be able to handle multiple zombies at the same time, this means we need to use more suffisticated models like DQNs for estimating our values.

-

Our agent to be able learn to kill zombies after we take the enchanted sword away (i.e. more than one hit is required to kill a zombie)

-

We talked to Malmo developers on Github and they added the functionally to have other entities health in observations. (Link to discussion) So we can use that to detect if we hit the a Zombie or not. However, we did not add this to current report since the new feature is not in the Malmo releases yet and we need to build from source if we want to use it.

Challenges

Most of the challenges we had was due to Malmo rather than the reinforcement learning algortihms, for example occasionally the zombie did not respawn at all. Also navigating the malmo documentation and finding what we needed was something that took a considerable amount of time.